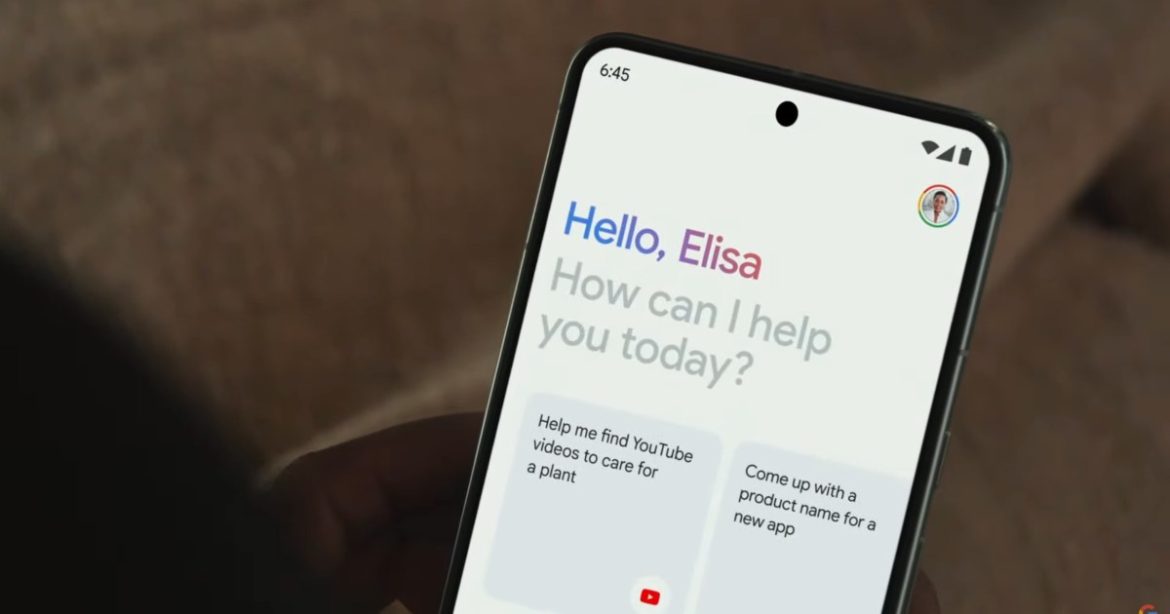

Google announced Thursday that it is releasing Gemini 1.5 Flash, its snack-sized large language model and GPT-4o mini competitor, to all users regardless of their subscription level.

The company promises “across-the-board improvements” in terms of response quality and latency, as well as “especially noticeable improvements in reasoning and image understanding.”

Google initially released Gemini 1.5 Flash in May as a lighter-weight version of its flagship Gemini 1.5 Pro model. It’s designed to perform less resource-intensive inference tasks faster and more efficiently than Pro does, much as Claude 3.5 Haiku, Llama 3.1-8B and GPT-4o mini do for their respective parent models.

Flash’s context window is drastically expanding with this update, growing from a paltry 8K tokens to 32K (roughly 50 pages of text). Granted, that’s a 4x increase in size, but even at 32K, Gemini Flash’s context window is still just a quarter the size of GPT-4o mini’s 128K window (about a book’s worth).

What’s more, Google plans to “soon add” the ability to upload text and image files, either from Google Drive or the local hard drive, directly to the context window. This feature was previously restricted to the subscription tiers.

The company also announced updates on its efforts to reduce instances of hallucinations within its models. Google plans to include “links to related content for fact-seeking prompts in Gemini,” essentially providing links to the sources it cites.

The AI will do this for both traditional search and associated Workspace apps. If the AI uses information gleaned through its Gmail integration, it will provide links back to the relevant emails.

These updates are available immediately to nearly all Gemini users on both web and mobile and in 40 languages. Teens who meet the minimum age requirements needed to manage their own Google accounts will receive access next week.